Text Classification: Simplified

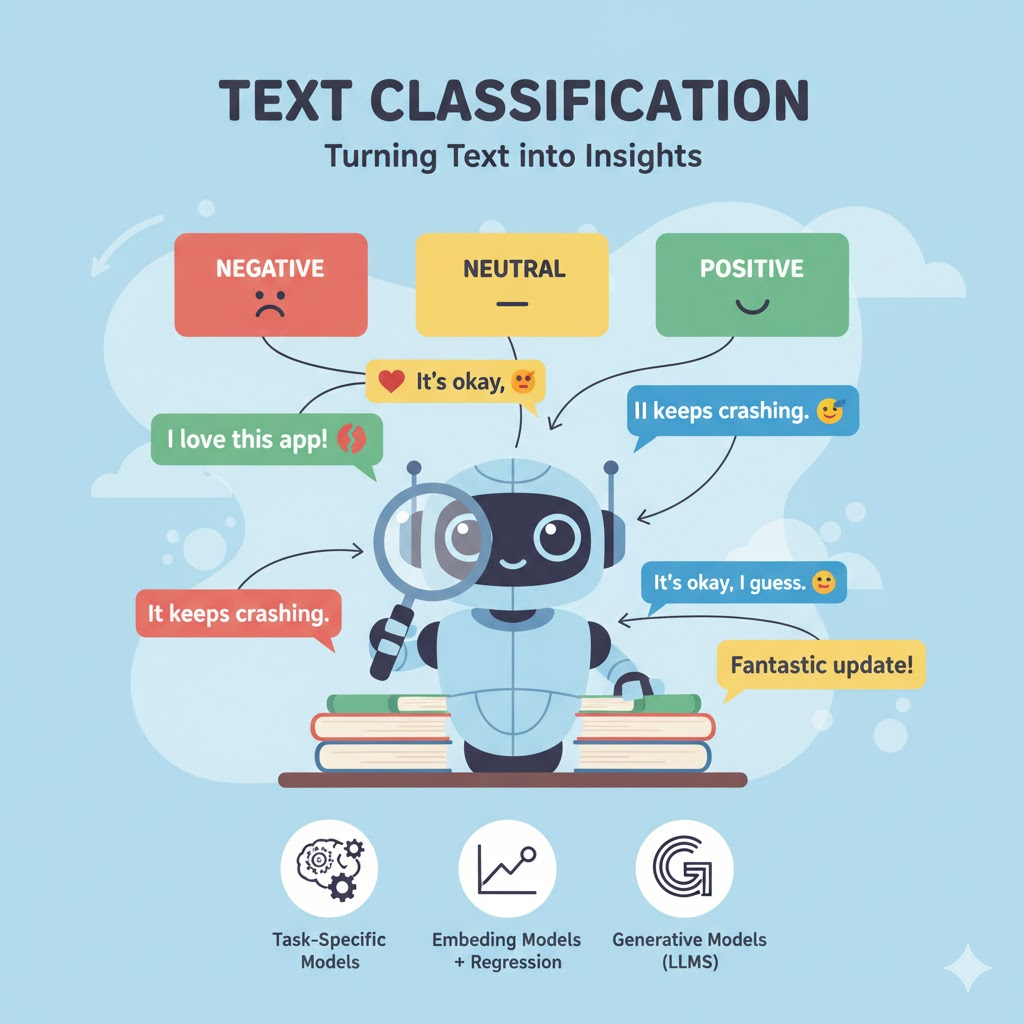

Text classification is the process of assigning predefined categories or labels to text data. A common example is sentiment analysis, where we determine whether a piece of text expresses a positive or negative emotion.

In this post, we’ll explore three different methods for performing sentiment analysis on text data:

- Task-Specific Models (Pretrained Models)

- Embedding Models + Regression

- Generative Models

We’ll use the sealuzh/app_reviews dataset from Hugging Face, which contains roughly 288K Android app reviews with star ratings from 1 to 5, published as a single train split.

For simplicity and faster processing, we’ll take the first 10,000 reviews and split them into 9,000 training samples and 1,000 test samples.

1. Using a Task-Specific (Pretrained) Model

Task-specific models are representation models (encoder-only transformers such as BERT or RoBERTa) that have been fine-tuned for a particular classification task.

A popular example for sentiment analysis is the nlptown/bert-base-multilingual-uncased-sentiment model from Hugging Face.

It was fine-tuned on product reviews in six languages and predicts a rating from 1 to 5 stars, so it transfers reasonably well to app reviews.
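The model reports its prediction as a text label rather than a number. As a minimal sketch, assuming the labels follow the "1 star" through "5 stars" pattern listed on the model card, a small helper (the name `stars_from_label` is ours, not part of any library) can turn a label into an integer rating:

```python
def stars_from_label(label: str) -> int:
    """Convert a label such as '4 stars' into the integer 4.

    Assumes the label starts with a digit from 1 to 5 followed by a space,
    which is how this model's labels are expected to be formatted.
    """
    rating = int(label.split(" ")[0])
    if not 1 <= rating <= 5:
        raise ValueError(f"unexpected label: {label!r}")
    return rating


print(stars_from_label("1 star"))   # 1
print(stars_from_label("5 stars"))  # 5
```

Raising an error on anything outside 1 to 5 makes a change in label format fail loudly instead of silently skewing the evaluation.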

Steps:

- Load the dataset

- Load the pretrained model

- Predict using the model

- Evaluate performance

Load the dataset

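```python
from datasets import load_dataset

# The dataset ships a single 'train' split, so we carve out our own subset.
data = load_dataset('sealuzh/app_reviews')
overall_training_data = data['train'].select(range(10000))

# First 9,000 rows for training, the remaining 1,000 for testing.
training_data = overall_training_data.select(range(9000))
test_data = overall_training_data.select(range(9000, 10000))
```

Load the model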

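```python
from transformers import pipeline

pipe = pipeline(
    "text-classification",
    model="nlptown/bert-base-multilingual-uncased-sentiment",
    truncation=True,  # cut off inputs that exceed max_length
    max_length=512,   # the model's maximum sequence length, in tokens
    top_k=None,       # return scores for all labels (replaces the deprecated return_all_scores=True)
)
```

Note: Since some reviews exceed 512 tokens, we truncate them to fit the model’s maximum input length.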

Predict and evaluate

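```python
from transformers.pipelines.pt_utils import KeyDataset
from sklearn.metrics import classification_report

y_pred = []
for result in pipe(KeyDataset(test_data, 'review')):
    # result holds one {'label', 'score'} dict per class; keep the highest-scoring one.
    best = max(result, key=lambda r: r['score'])
    # Labels look like '4 stars', so the leading token is the predicted rating.
    y_pred.append(int(best['label'].split(' ')[0]))

def evaluate_performance(y_true, y_pred):
    return classification_report(y_true=y_true, y_pred=y_pred)

# The ground-truth rating is stored in the dataset's 'star' column.
print(evaluate_performance(test_data['star'], y_pred))
```

The model performs reasonably well on clearly negative and clearly positive reviews, but tends to struggle to distinguish between mid-range ratings (2–4). This is a common difficulty in fine-grained sentiment classification: adjacent star ratings often differ only subtly in wording, so a 3-star and a 4-star review can read almost the same.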
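Because star ratings are ordered, predicting 4 for a true 5 is a smaller mistake than predicting 1. The classification report treats both as equally wrong, so it can help to also look at how far off the predictions are. The sketch below uses only the standard library; the helper names are illustrative, and `y_true` and `y_pred` are assumed to be equal-length lists of integer ratings.

```python
def mean_star_error(y_true, y_pred):
    """Average distance, in stars, between true and predicted ratings."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def within_one_accuracy(y_true, y_pred):
    """Share of predictions that are at most one star away from the truth."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(abs(t - p) <= 1 for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


# Toy ratings, made up for illustration only.
true_ratings = [5, 4, 3, 1, 2]
predicted = [5, 5, 1, 1, 3]

print(mean_star_error(true_ratings, predicted))      # 0.8
print(within_one_accuracy(true_ratings, predicted))  # 0.8
```

A high within-one accuracy alongside a low exact accuracy would suggest the model mostly confuses neighboring ratings rather than opposite ones.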

2. Using an Embedding Model + Regression

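The second approach uses text embeddings as features and a logistic regression model for prediction.

This method is often more flexible than relying on a single pretrained classifier, because the lightweight model on top can be retrained on your own labels without fine-tuning the transformer.

We’ll use:

- Embedding model: sentence-transformers/all-mpnet-base-v2

- Regression model: LogisticRegression from scikit-learn (despite its name, a classifier, which suits discrete ratings from 1 to 5)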

Steps:

- Generate embeddings for the reviews

- Train a regression model

- Predict and evaluate

Encode reviews and train the regression model

```python
from sentence_transformers import SentenceTransformer
from sklearn.linear_model import LogisticRegression

embedding_model = SentenceTransformer("sentence-transformers/all-mpnet-base-v2")

# Turn each review into a fixed-size embedding vector.
training_embeddings = embedding_model.encode(training_data['review'])

# max_iter is raised from the default of 100 to help the solver converge.
regression_model = LogisticRegression(random_state=42, max_iter=1000)
regression_model.fit(training_embeddings, training_data['star'])
```

Evaluate on test data

```python
test_embeddings = embedding_model.encode(test_data['review'])
y_pred = regression_model.predict(test_embeddings)
print(evaluate_performance(test_data['star'], y_pred))
```

The embedding + regression approach can yield more stable predictions for mid-range ratings, since the classifier is trained on this dataset’s own labels, and it can be retrained easily on new data. However, it requires more setup and training time than using a pretrained classifier as-is.
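To judge whether a trained classifier is actually learning something, it can be useful to compare it against a trivial baseline that always predicts the most frequent training rating. This is a sketch with made-up ratings and a hypothetical helper name, not part of the workflow above; in practice you would pass the real training and test ratings.

```python
from collections import Counter


def majority_baseline(train_ratings, test_ratings):
    """Return the most common training rating and its accuracy on the test ratings."""
    if len(train_ratings) == 0 or len(test_ratings) == 0:
        raise ValueError("both rating lists must be non-empty")
    most_common_rating, _ = Counter(train_ratings).most_common(1)[0]
    accuracy = sum(r == most_common_rating for r in test_ratings) / len(test_ratings)
    return most_common_rating, accuracy


# Toy ratings, made up for illustration only.
train = [5, 5, 4, 1, 5, 3]
test = [5, 1, 5, 4]

print(majority_baseline(train, test))  # (5, 0.5)
```

If the classifier's accuracy is not clearly above this baseline, the rating distribution is probably doing most of the work.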

3. Using Generative Models (LLMs)

Generative models like GPT can perform zero-shot or few-shot sentiment analysis by following natural language instructions, optionally with a handful of labeled examples in the prompt.

We’ll use OpenAI’s GPT model to predict sentiment ratings between 1 and 5 directly from text.

Example Code

```python
from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

prompt = """
You are an expert in understanding user sentiment from app reviews.
Your task is to predict how satisfied the user is with the app, on a scale of 1 to 5, based on the tone, wording, and emotion expressed in their review.

1 → Very Negative
2 → Negative
3 → Neutral
4 → Positive
5 → Very Positive

Here are a few examples:

Review: Great app! Works well on my Galaxy Note 5.
Rating: 5

Review: Crash when set a demodulation! :(
Rating: 1

Review: The app is okay but could use some improvements.
Rating: 3

Now, predict the rating for the following review.

Review:
<REVIEW_TEXT>

Answer format:
Rating: <number between 1 and 5>
"""

def predict_rating(review):
    # Substitute the review into the few-shot prompt and ask the model for a rating.
    completion = client.chat.completions.create(
        model="gpt-5-mini",
        messages=[
            {"role": "system", "content": "You are a helpful assistant that rates app reviews."},
            {"role": "user", "content": prompt.replace("<REVIEW_TEXT>", review)},
        ],
    )
    return completion.choices[0].message.content.strip()

if __name__ == "__main__":
    test_review = "Crash when set a demodulation! :("
    rating = predict_rating(test_review)
    print(f"Predicted Rating: {rating}")
```

This approach is powerful because it requires no training: you simply describe the task.

However, it may be slower and more expensive for large datasets, depending on the model used.
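The model replies with free text such as "Rating: 4", so the answer needs to be converted into an integer before it can be scored with the same evaluation function as the other methods. Here is a minimal sketch, assuming the reply usually follows the requested format; `parse_rating` is a hypothetical helper, and returning None for unparseable replies is one design choice among several.

```python
import re
from typing import Optional


def parse_rating(response_text: str) -> Optional[int]:
    """Extract a 1-5 rating from text like 'Rating: 4'; return None if absent."""
    match = re.search(r"Rating:\s*([1-5])\b", response_text)
    if match is None:
        return None
    return int(match.group(1))


print(parse_rating("Rating: 4"))           # 4
print(parse_rating("I think it's great"))  # None
```

Replies that come back as None can be retried or excluded from the evaluation, depending on how strict the comparison needs to be.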

Conclusion

| Method | Type | Pros | Cons |
|---|---|---|---|
| Task-Specific Model (BERT) | Encoder-based | Fast, pretrained, good for polarity (positive/negative) | Limited to fixed labels |
| Embedding + Regression | Hybrid | Flexible, retrainable, interpretable | Requires training & tuning |
| Generative Model (GPT) | LLM-based | Zero-shot, adaptable, intuitive | Slower and costlier |

Each method has trade-offs, and the right choice depends on your data size, latency requirements, and flexibility needs.

If you’re working with large labeled datasets and need reproducibility, go with embedding models.

If you’re exploring or need to handle complex, nuanced sentiments, generative models are a strong option.