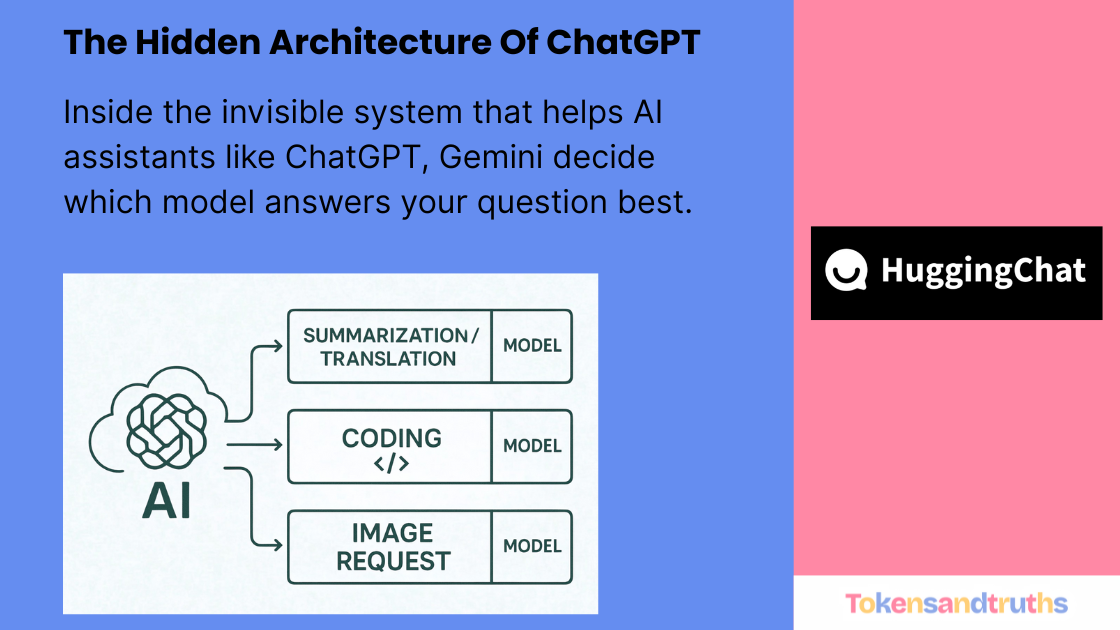

How AI Assistants Like ChatGPT Route Queries to the Right Models

Have you ever stopped to think about how ChatGPT seems to know everything? It can write code, craft stories, create images, analyze data, and even act like an agent that executes tasks. All from a single chat box.

It feels seamless, almost magical. But what’s really happening behind the scenes is something called model routing.

This is the invisible system that powers modern AI assistants like ChatGPT, Anthropic’s Claude, Google Gemini, and HuggingFace’s HuggingChat. It’s what allows them to perform different types of tasks without you ever noticing that your request is being passed around between different specialized models.

From One Model to Many

In the early days of AI, there was usually one big model trying to do everything. It could write text, answer questions, or even generate some code, but it wasn’t great at all of them. As AI grew more capable, companies realized something simple but powerful: no single model can master every task.

That’s when model routing came in.

Think of it like an air traffic controller for your questions. When you type a query, the system quickly analyzes it and decides which specialized model can best handle it.

For example:

You might say, “Write me a Python function to upload a file to S3.”

The system recognizes it’s a coding request and sends it to a model trained for programming.

Or you might say, “Write an Eminem-style rap about AI taking over hip-hop.”

This time, it sends it to a creative writing model that understands rhythm, rhyme, and cultural tone.

Each model does what it’s best at, and the router makes sure your query gets to the right one.
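To make the air-traffic-controller idea concrete, here is a minimal Python sketch of a rule-based router. It is illustrative only: the model names (`code-model`, `creative-model`, `general-model`) and the keyword lists are hypothetical, and real assistants are more likely to use a trained classifier or a small language model than hand-written keywords.

```python
import re

# Hypothetical specialist model names, for illustration only.
ROUTES = {
    "code": "code-model",
    "creative": "creative-model",
    "general": "general-model",
}

# Hypothetical keyword hints per route; a real router would learn such signals.
KEYWORDS = {
    "code": {"python", "function", "sql", "query", "code", "bug", "api", "s3"},
    "creative": {"rap", "poem", "story", "lyrics", "song", "eminem-style"},
}


def classify(query: str) -> str:
    """Return the route label whose keywords overlap the query the most."""
    words = set(re.findall(r"[a-z0-9\-]+", query.lower()))
    scores = {label: len(words & hints) for label, hints in KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # Fall back to the general model when no keyword matched at all.
    return best if scores[best] > 0 else "general"


def route(query: str) -> str:
    """Map a user query to the name of the model that should handle it."""
    return ROUTES[classify(query)]


if __name__ == "__main__":
    print(route("Write me a Python function to upload a file to S3."))
    print(route("Write an Eminem-style rap about AI taking over hip-hop."))
    print(route("What's the capital of France?"))
```

With these rules, the S3 request goes to `code-model`, the rap request to `creative-model`, and a query that matches no keyword falls back to `general-model`.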

HuggingChat: A Window into Open Model Routing

HuggingFace, known for hosting more than 100,000 open models and datasets, recently launched HuggingChat. It’s one of the first public tools that lets you experience model routing in action, out in the open.

When you type something into HuggingChat, it doesn’t rely on a single model. It picks one from a pool of open models hosted on HuggingFace, based on what you’re asking.

I tried it myself. When I asked:

- “Write a code to upload a file in S3”: HuggingChat used Qwen/Qwen3-VL-235B-A22B-Thinking, a large reasoning model that also handles coding.

- “Write an Eminem-style rap battling AI taking over hip-hop”: it picked moonshotai/Kimi-K2-Instruct-0905, an instruction-tuned model well suited to creative text generation.

What’s happening here is similar to what ChatGPT and others do internally. The difference is that HuggingChat lets you see which model is actually handling your request.


How ChatGPT, Claude, and Gemini Make It Seamless

Unlike HuggingChat, companies like OpenAI, Anthropic, and Google don’t usually tell you which model handles a given request, and they publish few details about how their routing works. But the broad process is likely similar.

Here’s what typically happens when you send a message:

- Understanding the intent: the system first figures out what you want to do. Is this about writing code, generating text, creating an image, or reasoning through a problem?

- Routing to the right model: once it understands the task, it routes your query accordingly.
  - Coding requests go to models trained on large codebases.
  - Writing or story-based prompts go to text generation models.
  - Image prompts go to diffusion or transformer-based visual models.
  - Research or analytical tasks may go to reasoning models or “agents” that can call APIs or search the web.

- Merging results if needed: some tasks require multiple skills, for example writing code and then explaining it. In that case, your request may pass through several models before you see the final output, all within seconds.
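The three steps above can be sketched as a small pipeline. This is an illustrative Python sketch under loud assumptions, not how ChatGPT, Claude, or Gemini actually work: the “models” are hypothetical stub functions, intent detection is a crude keyword check, and a multi-skill request is split on the phrase “and then” so each part can be routed separately, with each step seeing the previous step’s output before everything is merged.

```python
from typing import Callable, Dict, List


# Hypothetical stand-ins for specialist models. Each receives the task plus
# the previous step's output as context; a real system would call separate
# model endpoints here.
def code_model(task: str, context: str) -> str:
    return f"[code for: {task}]"


def image_model(task: str, context: str) -> str:
    return f"[image for: {task}]"


def text_model(task: str, context: str) -> str:
    suffix = f" (about {context})" if context else ""
    return f"[text for: {task}{suffix}]"


MODELS: Dict[str, Callable[[str, str], str]] = {
    "code": code_model,
    "image": image_model,
    "text": text_model,
}


def detect_intent(task: str) -> str:
    """Step 1: guess what the user wants with a crude keyword check."""
    lowered = task.lower()
    if any(w in lowered for w in ("code", "function", "script", "sql")):
        return "code"
    if any(w in lowered for w in ("image", "logo", "picture", "draw")):
        return "image"
    return "text"


def handle(request: str) -> str:
    """Split a multi-step request, route each part, then merge the outputs."""
    parts: List[str] = [p.strip() for p in request.split(" and then ") if p.strip()]
    outputs: List[str] = []
    context = ""
    for part in parts:
        intent = detect_intent(part)            # Step 1: understand the intent
        result = MODELS[intent](part, context)  # Step 2: route to a model
        outputs.append(result)
        context = result                        # later steps see earlier output
    return "\n".join(outputs)                   # Step 3: merge the results


print(handle("Write a Python function to reverse a list and then explain it"))
```

Here the first part is routed to the code stub and the second to the text stub, which receives the code output as context, mirroring the “write code, then explain it” example.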

So when you ask ChatGPT to generate code, create an image, and then explain it, you’re likely not talking to one massive model doing everything. You’re interacting with a whole ecosystem of experts, orchestrated behind the scenes.

Everyday Examples of Model Routing

Here are a few examples of how routing might look in everyday use:

| Task | Type of Model | Example Output |
|---|---|---|
| “Write a SQL query to find users with expired subscriptions.” | Code model | SQL snippet |
| “Summarize this 10-page research paper.” | Summarization model | Concise summary |
| “Create a 3D logo of a hummingbird in blue and gold.” | Image model | Generated image |
| “Plan a 3-day trip to Tokyo with food and cultural spots.” | Reasoning and planning model | Day-by-day itinerary |
| “Generate marketing copy for a new AI product.” | Text generation model | Catchy promotional text |

Each of these feels like one assistant doing the job, but behind the scenes, several models may be coordinated to produce the result.
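One way a router could turn examples like those in the table above into routing decisions is to treat each example prompt as a labeled sample and send a new query to the model type of its most similar example. The Python sketch below assumes this nearest-example approach and uses plain bag-of-words cosine similarity; it is not any vendor’s documented method, a real system would more plausibly use learned text embeddings, and the `general` fallback label is hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

# Labeled example prompts taken from the table above; labels are model types.
EXAMPLES: List[Tuple[str, str]] = [
    ("Write a SQL query to find users with expired subscriptions.", "code"),
    ("Summarize this 10-page research paper.", "summarization"),
    ("Create a 3D logo of a hummingbird in blue and gold.", "image"),
    ("Plan a 3-day trip to Tokyo with food and cultural spots.", "planning"),
    ("Generate marketing copy for a new AI product.", "text generation"),
]


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a stand-in for a learned text embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def route(query: str) -> str:
    """Return the model type of the most similar labeled example."""
    q = bag_of_words(query)
    best_score, best_label = max(
        ((cosine(q, bag_of_words(text)), label) for text, label in EXAMPLES),
        key=lambda pair: pair[0],
    )
    # Hypothetical fallback when the query shares no words with any example.
    return best_label if best_score > 0 else "general"


print(route("Write a SQL query that lists inactive users"))
print(route("Summarize this research article in three bullet points"))
```

The design choice here is that adding a new capability only requires adding labeled examples rather than writing new rules, which is one reason similarity-based routing scales better than keyword lists.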

A Glimpse Into the Future

What HuggingFace is building with HuggingChat hints at where AI is headed. Soon, you may not need to pick models or tools manually. You’ll simply describe what you need, and the system will find and use the best model for that specific task automatically.

You might code with one model, summarize research with another, translate using a third, and design visuals with a fourth. All from one chat screen.

We’re moving toward a world where you’re not using “ChatGPT” or “Gemini” as single entities, but rather a network of specialized models working together, hidden under a single, simple interface.

Closing Thoughts

Model routing is what makes today’s AI assistants feel alive and versatile. It’s the reason they can:

- Code like a developer

- Write like a novelist

- Design like an artist

- Research like an analyst

Whether it’s HuggingChat, ChatGPT, Claude, or Gemini, the principle remains the same. It’s not one model doing everything. It’s many models, each an expert in its field, working together intelligently to give you the best result possible.

Try HuggingChat here: https://huggingface.co/chat/