Everyone Is Calling Everything an AI Agent. Here Is What Actually Is One.

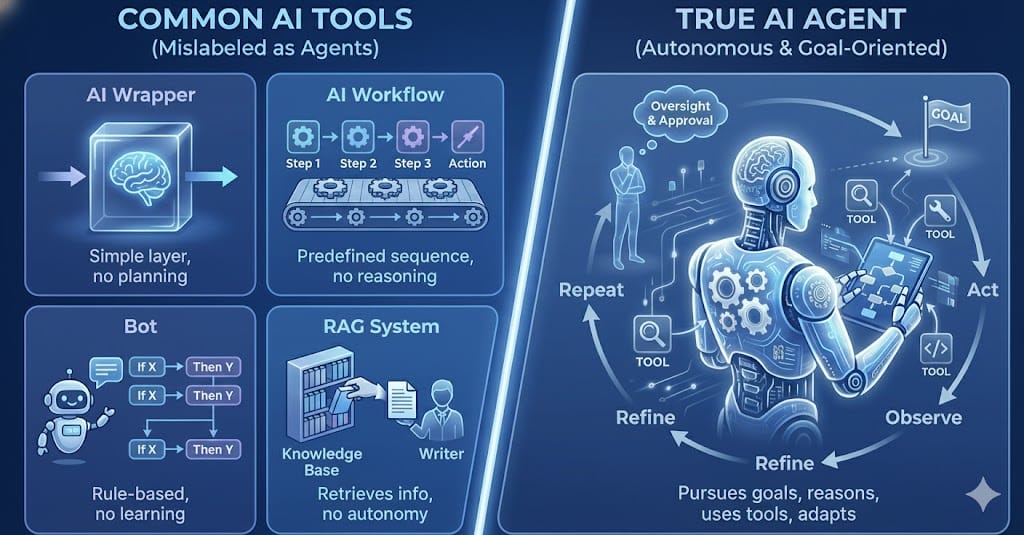

As we step into 2025, one of the most overused and misunderstood words in AI is “agent.” Every new product, SaaS tool, or startup pitch seems to describe itself as an AI agent. In reality, most of these systems are not agents at all. They are wrappers, workflows, bots, or retrieval systems with a new label.

This post aims to clearly explain the difference between AI wrappers, workflows, bots, RAG systems, and true AI agents. The goal is not to diminish any of these approaches. Each has its own place. The problem starts when we blur the boundaries and oversell capabilities.

Let us break them down one by one.

AI Wrappers

An AI wrapper is the simplest and most common AI product today.

At its core, it is a thin layer around a large language model or a foundation model. The wrapper provides a user interface, a prompt template, and sometimes basic input validation or formatting. The intelligence comes almost entirely from the underlying model.

What it looks like in practice

Examples include:

- A tool that takes text input and returns a summary

- A translation app that calls an LLM API

- A marketing copy generator with predefined prompts

- A resume or cover letter generator

The flow is straightforward:

User input goes to the model and the model response is returned to the user.
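The flow is simple enough to sketch in a few lines of Python. This is a minimal illustration, not any particular product: `call_model` is a hypothetical stand-in for a real LLM client, and the prompt template is an assumption.

```python
from typing import Callable

# Illustrative prompt template (an assumption, not taken from any real product).
SUMMARY_TEMPLATE = "Summarize the following text in {max_sentences} sentences:\n\n{text}"


def summarize(text: str, call_model: Callable[[str], str], max_sentences: int = 3) -> str:
    """The entire 'AI wrapper': validate input, fill a template, return the model's reply."""
    cleaned = text.strip()
    if not cleaned:
        raise ValueError("input text is empty")
    prompt = SUMMARY_TEMPLATE.format(max_sentences=max_sentences, text=cleaned)
    # A single model call: no planning, no memory, no evaluation of the output.
    return call_model(prompt).strip()


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM client; it just echoes the prompt's first line."""
    return prompt.splitlines()[0]


if __name__ == "__main__":
    print(summarize("  Most 'agents' are wrappers.  ", fake_model))
    # prints "Summarize the following text in 3 sentences:"
```

Everything that looks intelligent happens inside `call_model`; the wrapper itself only shapes the input and passes the output through.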

There is no planning, no decision making, no memory across sessions, and no adaptation based on outcomes. If you send the same input, you will usually get the same type of output.

What it is not

An AI wrapper does not decide what to do next.

It does not decompose problems.

It does not evaluate its own output.

It does not act autonomously.

Wrappers are useful, cost-effective, and often sufficient. They just are not agents.

AI Workflows

AI workflows combine multiple steps into a predefined sequence. Tools like Zapier, Make, Gumloop, and similar automation platforms fall into this category.

Here, AI is used as one component within a larger pipeline.

What it looks like in practice

Example workflow:

- A user submits a support ticket

- The ticket text is sent to an LLM to classify urgency

- Based on the classification, the workflow routes it to a specific Slack channel

- A templated response is sent back to the user

Another example:

- New lead enters a CRM

- LLM generates a personalized email

- Email is sent automatically

- Lead is tagged in the system

The sequence is defined upfront. The AI does not decide the structure of the workflow. It only fills in specific steps.
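The support-ticket example can be sketched as a fixed pipeline. In this hypothetical version, `classify` stands in for the LLM call, the channel names are invented, and actually posting to Slack or emailing the user is left out. The model only fills in the urgency label; the order of steps never changes.

```python
from typing import Callable

# Fixed routing table: the builder decides the structure, not the model.
# Channel names are hypothetical.
ROUTES = {"high": "#support-urgent", "medium": "#support", "low": "#support-backlog"}

TEMPLATE_REPLY = "Thanks for contacting us. Your ticket has been routed to our team."


def handle_ticket(ticket_text: str, classify: Callable[[str], str]) -> dict:
    """Run the same three steps, in the same order, for every ticket."""
    # Step 1: the LLM fills in one slot (urgency) and nothing else.
    urgency = classify(ticket_text).strip().lower()
    # Step 2: deterministic routing; unknown labels fall back to the default channel.
    channel = ROUTES.get(urgency, ROUTES["medium"])
    # Step 3: a templated reply, identical for every ticket.
    return {"urgency": urgency, "channel": channel, "reply": TEMPLATE_REPLY}
```

For instance, `handle_ticket("Checkout is down", lambda t: "High")` routes to `#support-urgent`, and so will every other ticket the classifier labels high. The workflow never asks whether routing was the right move.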

Key characteristics

- Deterministic execution path

- Predictable outcomes

- No long-term memory

- No goal-seeking behavior

Workflows are powerful and scalable. They reduce manual work. But they do not reason or adapt. Calling them agents creates unrealistic expectations.

Bots

Bots existed long before modern LLMs. Most traditional chatbots are built using decision trees, rules, or scripted flows.

Even today, many so-called AI chatbots still rely heavily on this structure.

What it looks like in practice

Example:

- User asks about pricing

- Bot matches intent to “pricing”

- Bot returns a predefined answer

If you ask the same question tomorrow, next week, or next year, the answer is identical.
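A rule-driven bot of this kind can be sketched with keyword matching. The intents, keywords, and canned answers below are hypothetical; production bots usually use a dedicated intent classifier, but the shape is the same: match an intent, return a fixed answer.

```python
import re

# Hypothetical intents: each maps trigger keywords to one canned answer.
INTENTS = {
    "pricing": (
        {"price", "pricing", "cost", "plan", "plans"},
        "Our plans and prices are listed on the pricing page.",
    ),
    "hours": (
        {"hours", "open", "closed"},
        "Support is available Monday to Friday.",
    ),
}
FALLBACK = "Sorry, I did not understand. You can ask about pricing or support hours."


def reply(message: str) -> str:
    """Return the answer for the first intent whose keywords appear; no learning, no state."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keywords, answer in INTENTS.values():
        if words & keywords:
            return answer
    return FALLBACK
```

Because `reply` is a pure function of the message, the same question always gets the same answer, and anything outside the predefined intents falls through to `FALLBACK`.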

Some bots add light NLP or LLM-powered intent detection, but the core logic remains rule-driven.

Limitations

- No understanding beyond predefined intents

- No learning from interaction outcomes

- No ability to handle novel situations well

Bots are reliable for narrow use cases like FAQs or simple customer support. They are not intelligent systems and definitely not agents.

RAG Systems

Retrieval-Augmented Generation, or RAG, is often mistaken for agency. It is not.

RAG exists to solve a specific limitation of language models: lack of up-to-date or private knowledge.

What it looks like in practice

Example:

- You index internal documents, PDFs, or knowledge bases

- User asks a question

- Relevant chunks are retrieved from a vector database

- The retrieved content is passed to the LLM

- The LLM generates an answer grounded in that data

This is how internal Q&A assistants, enterprise search tools, and document chat systems work.
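The retrieval steps above can be sketched in plain Python. To keep it self-contained, this version swaps in simplified pieces: word-count vectors with cosine similarity stand in for a learned embedding model, and an in-memory list stands in for a vector database. The function names and prompt wording are assumptions, and the final LLM call is omitted; `build_prompt` returns the grounded prompt that would be sent to it.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Ground the model by passing the retrieved text along with the question."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

The pipeline runs once, in a fixed order: retrieve, then generate. Nothing in it decides whether to search again or checks whether the answer is correct.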

What RAG adds

- Access to fresh or proprietary information

- Reduced hallucination

- Grounded responses

What RAG does not add

- Decision making

- Autonomy

- Planning

- Self correction

RAG improves accuracy, not intelligence. It is an enhancement to generation, not agency.

AI Agents

This is where the definition matters.

An AI agent is a system that can pursue a goal over time, decide what actions to take, use tools, observe the results, and adjust its behavior accordingly.

The execution path is not fully predefined. The system reasons about what to do next.

Core components of an AI agent

- Model: usually an LLM that performs reasoning, planning, and reflection.

- Tools: APIs, databases, browsers, code execution environments, or internal systems the agent can act upon.

- Memory or knowledge base: short-term memory for context and long-term memory for learning across interactions.

- Human in the loop: oversight, approval, correction, and escalation when the agent is uncertain or an action is risky.

What it looks like in practice

Example: AI research agent

- Goal: Produce a competitive analysis of a market

- The agent decides to search the web

- It evaluates sources and discards low quality ones

- It notices missing data and performs follow up searches

- It synthesizes findings into a structured report

- A human reviews and approves the final output

Another example: Autonomous operations agent

- Goal: Resolve cloud infrastructure alerts

- The agent inspects logs

- Runs diagnostic commands

- Identifies likely root cause

- Proposes or executes a fix

- Escalates to a human if confidence is low

In both cases, the agent is not following a fixed script. It is reasoning, acting, observing, and iterating.
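The loop behind both examples can be sketched in Python, wiring together the four components listed earlier. This is a minimal illustration under stated assumptions: `policy` stands in for the model (a real agent would prompt an LLM with the goal and its memory, then parse the chosen action), the `read_logs` tool and `demo_policy` are hypothetical, and `ask_human` is a placeholder for whatever escalation channel a team actually uses.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    tool: str                # name of the tool to call, or "finish"
    argument: str = ""       # tool input, or the final answer when tool == "finish"
    confidence: float = 1.0  # the model's self-reported confidence (assumed available)


@dataclass
class Agent:
    policy: Callable[[str, list], Action]       # the "model": chooses the next action
    tools: dict[str, Callable[[str], str]]      # what the agent can act upon
    ask_human: Callable[[str], str]             # human-in-the-loop escalation
    memory: list = field(default_factory=list)  # short-term memory: (action, observation)
    max_steps: int = 10                         # hard step budget, a basic constraint
    min_confidence: float = 0.5

    def run(self, goal: str) -> str:
        for _ in range(self.max_steps):
            action = self.policy(goal, self.memory)  # reason: decide what to do next
            if action.confidence < self.min_confidence:
                return self.ask_human(f"Low confidence on {action.tool!r} for goal: {goal}")
            if action.tool == "finish":
                return action.argument
            tool = self.tools.get(action.tool)  # act
            observation = tool(action.argument) if tool else f"unknown tool {action.tool}"
            self.memory.append((action, observation))  # observe, then iterate
        return self.ask_human(f"Step budget exhausted for goal: {goal}")


def demo_policy(goal: str, memory: list) -> Action:
    """Hypothetical scripted stand-in for an LLM: read logs, then act on what was seen."""
    if not memory:
        return Action("read_logs", "web-01")
    if "disk full" in memory[-1][1]:
        return Action("finish", "Likely root cause: disk full on web-01")
    return Action("finish", "No cause found", confidence=0.2)


if __name__ == "__main__":
    agent = Agent(
        policy=demo_policy,
        tools={"read_logs": lambda host: f"{host}: ERROR disk full"},
        ask_human=lambda msg: f"ESCALATED: {msg}",
    )
    print(agent.run("Resolve alert on web-01"))
    # prints "Likely root cause: disk full on web-01"
```

The difference from a workflow is that `run` has no fixed sequence of steps: which tool is called next, and when to stop, depends on what the policy has observed. The step budget and confidence threshold are the simplest versions of the constraints that keep such a loop safe.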

Key characteristics of agents

- Non-deterministic execution

- Goal oriented behavior

- Tool use with feedback loops

- Ability to handle novel situations

- Designed for reliability through constraints, monitoring, and human oversight
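Constraints and oversight can start as a thin layer between the agent and its tools. The sketch below assumes a hypothetical allowlist, a hypothetical set of tools that need human sign-off, and an `approve` callback; every call, allowed or blocked, is recorded in an audit log for monitoring.

```python
from typing import Callable

# Hypothetical policy: which tools may run at all, and which need human sign-off.
ALLOWED_TOOLS = {"read_logs", "run_diagnostic", "restart_service"}
NEEDS_APPROVAL = {"restart_service"}


class GuardedTools:
    """Wraps an agent's tools with an allowlist, human approval, and an audit log."""

    def __init__(self, tools: dict[str, Callable[[str], str]],
                 approve: Callable[[str, str], bool]):
        self.tools = tools
        self.approve = approve  # human-in-the-loop check for risky actions
        self.audit_log: list[tuple[str, str, str]] = []  # (tool, argument, outcome)

    def call(self, name: str, argument: str) -> str:
        if name not in ALLOWED_TOOLS or name not in self.tools:
            outcome = "blocked: tool not allowed"
        elif name in NEEDS_APPROVAL and not self.approve(name, argument):
            outcome = "blocked: approval denied"
        else:
            outcome = self.tools[name](argument)
        self.audit_log.append((name, argument, outcome))  # monitoring trail
        return outcome
```

Blocked calls return a message instead of raising an exception, so the agent can observe the refusal and choose a different step rather than crash.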

Agents are harder to build, harder to evaluate, and riskier to deploy. That is why true agents are still rare compared to wrappers and workflows.

Why the Confusion Exists

The term “agent” sounds powerful. It signals autonomy and intelligence. As a result, it is often used as a marketing label rather than a technical description.

But confusing users harms trust. When a workflow fails, the failure is usually predictable and confined to a known step. When a system sold as an agent fails, it can cause real damage, because users assumed it had more intelligence than it does.

Clear definitions help everyone:

- Builders choose the right architecture

- Users set correct expectations

- Teams deploy systems safely

Final Thought

Not every product needs an AI agent. In fact, most do not.

Wrappers solve simple problems efficiently.

Workflows automate predictable processes.

Bots handle repetitive interactions.

RAG systems improve accuracy and grounding.

Agents should be reserved for problems that require reasoning, adaptation, and sustained goal pursuit.

If we use the word “agent,” we should mean it.