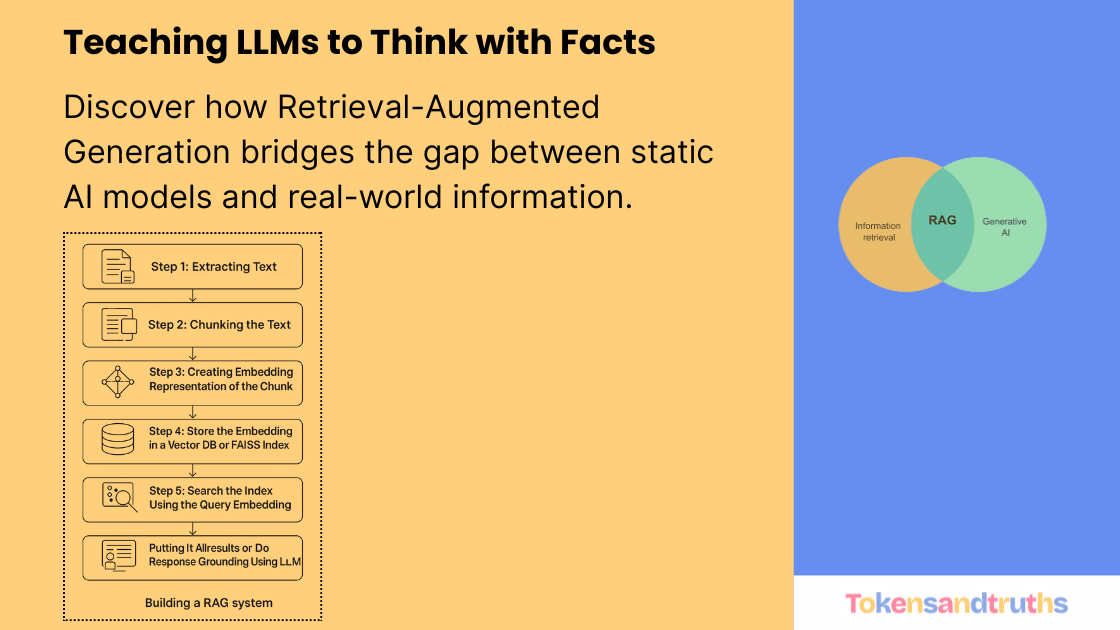

Building a RAG (Retrieval Augmented Generation) System from Scratch

Large Language Models (LLMs) like ChatGPT have taken the world by storm, with ChatGPT alone surpassing 800 million users. It’s no surprise that people now ask LLMs for everything from trivia to technical explanations.

But there’s a catch.

Even though these models are incredibly capable, they are trained on static datasets. Ask something recent or domain-specific, and you’ll often get a confident but outdated answer.

So how do LLMs still manage to answer day-to-day factual questions?

That’s where RAG (Retrieval Augmented Generation) comes in.

RAG bridges the gap between static model knowledge and live, factual data, letting LLMs retrieve relevant information and then generate accurate responses based on it.

Why RAG Matters

LLMs can hallucinate, which means they sometimes invent facts.

For companies that need reliable answers from internal data, that’s unacceptable.

RAG solves this by grounding the model’s responses in retrieved content.

It’s the best of both worlds: factual accuracy and generative fluency.

For example:

- “What’s our 2024 refund policy?” → pulled from your internal docs.

- “Which product feature caused the most support tickets?” → retrieved from your logs.

The RAG Workflow

Here’s what a simple RAG pipeline looks like:

- Extract text from sources (PDF, DOCX, HTML, etc.)

- Chunk the text into smaller parts

- Embed those chunks as numerical vectors

- Store embeddings in a vector database (for example, FAISS or Pinecone)

- Search the store using a query embedding

- Rerank or ground the results using an LLM

Let’s walk through each step.

1. Extracting Text

LLMs understand numbers, not raw files.

So the first step is extracting text from your documents.

Libraries like langchain, beautifulsoup, pypandoc, or llamaindex make this easy.

For this demo, let’s use the Wikipedia API.

import requests

def fetch_wikipedia_page(title):

title = requests.utils.quote(title)

url = f"https://en.wikipedia.org/w/api.php?action=query&prop=extracts&explaintext=true&titles={title}&format=json"

response = requests.get(url, headers={"User-Agent": "Mozilla/5.0"})

response.raise_for_status()

data = response.json()

text = ""

for page_id in data["query"]["pages"]:

text += "\n" + data["query"]["pages"][page_id]["extract"]

return textExample:

text = fetch_wikipedia_page("OpenAI")print(text[:500])

2. Chunking the Text

Documents can be huge, far larger than an embedding model can handle.

Chunking splits long text into smaller, semantically meaningful parts.

Common strategies:

- Fixed-length chunking (by token or character count)

- Paragraph chunking

- Semantic chunking (based on meaning boundaries)

👉 Read more: Weaviate’s Context Engineering Guide

Example:

def chunk_text(text, chunk_size=500, overlap=50):

words = text.split()

chunks = []

for i in range(0, len(words), chunk_size - overlap):

chunks.append(" ".join(words[i:i + chunk_size]))

return chunks3. Creating Embeddings

Each chunk is converted into an embedding, a vector that represents meaning.

You can use open-source models like SentenceTransformers or APIs like Cohere.

from sentence_transformers import SentenceTransformer

EMBEDDING_MODEL = "sentence-transformers/multi-qa-mpnet-base-dot-v1"

def get_embeddings(texts):

model = SentenceTransformer(EMBEDDING_MODEL)

return model.encode(texts, convert_to_numpy=True).tolist()Example:

embeddings = get_embeddings(["OpenAI develops AI models.", "FAISS enables similarity search."])

print(len(embeddings), len(embeddings[0]))4. Storing Embeddings in a Vector Database

Embeddings are numeric arrays. To search efficiently, store them in a vector database.

Options include FAISS, Pinecone, Weaviate, or Milvus.

Here’s a minimal FAISS example:

import faiss

import numpy as np

def create_faiss_index(embeddings, index_name):

dim = len(embeddings[0])

index = faiss.IndexFlatL2(dim)

index.add(np.array(embeddings).astype("float32"))

faiss.write_index(index, index_name)

return index

def load_index(index_name):

return faiss.read_index(index_name)

5. Searching the Index

Now embed the user’s query and find similar chunks.

import faiss

from sentence_transformers import SentenceTransformer

EMBEDDING_MODEL = "sentence-transformers/multi-qa-mpnet-base-dot-v1"

def search(query, index_name, top_k=5):

index = faiss.read_index(index_name)

model = SentenceTransformer(EMBEDDING_MODEL)

query_emb = model.encode([query], convert_to_numpy=True).astype("float32")

distances, indices = index.search(query_emb, top_k)

return [(int(indices[0][i]), float(distances[0][i])) for i in range(top_k)]Example:

results = search("Who founded OpenAI?", "wiki_index.faiss")

print(results)6. Reranking and Response Grounding

Even relevant-looking results can be off.

We can improve accuracy by reranking or by having the LLM ground its answer in the retrieved context.

from openai import OpenAI

OPENAI_MODEL = "gpt-4o-mini"

OPENAI_API_KEY = "your_api_key"

prompt = """

You are a helpful assistant. Answer the question based on the context below.

Question: {question}

Context:

{context}

Provide a concise, accurate answer.

"""

client = OpenAI(api_key=OPENAI_API_KEY)

def answer_question(question, context):

formatted = prompt.format(question=question, context=context)

response = client.responses.create(input=formatted, model=OPENAI_MODEL)

return response.output_textPutting It All Together

You now have a simple but complete RAG pipeline:

- Fetch text

- Chunk it

- Generate embeddings

- Store them in FAISS

- Search by similarity

- Use an LLM to generate grounded answers

Full code here → github.com/iam-bk/rag

Closing Thoughts

RAG is one of the most practical ways to make LLMs truly useful in the real world.

By combining retrieval and generation, you can build systems that are:

- Factually accurate

- Context-aware

- Always up to date

Whether you are building an internal chatbot, knowledge assistant, or custom AI search engine, RAG is the foundation for reliable and intelligent AI applications